3 Troubleshoot Memory Leaks

If your application's execution time becomes longer and longer, or if the operating system seems to be performing slower and slower, this could be an indication of a memory leak. In other words, virtual memory is being allocated but is not being returned when it is no longer needed. Eventually the application or the system runs out of memory, and the application terminates abnormally.


Debug a Memory Leak Using Java Flight Recorder

The Java Flight Recorder (JFR) is a commercial feature. You can use it for free on developer desktops or laptops, and for evaluation purposes in test, development, and production environments.

However, to enable JFR on a production server, you must have a commercial license. Using the Java Mission Control (JMC) for other purposes on the JDK does not require a commercial license.

To learn more about the JFR commercial features and availability, see the product documentation.

To learn more about the JFR commercial license, see the license agreement.

The following sections show figures and describe how to debug a memory leak using Java Flight Recorder.

Detect a Memory Leak

Detect memory leaks early and prevent OutOfMemoryErrors using Java Flight Recordings.

Detecting a slow memory leak can be hard. A typical symptom is that the application becomes slower after running for a long time because of frequent garbage collections. Eventually, OutOfMemoryErrors may be seen. However, by using Java Flight Recordings, you can detect memory leaks early, even before a problem occurs.

Watch whether the live set of your application is increasing over time. The live set is the amount of Java heap that is still in use after an old collection, when all objects that are not live have been garbage collected. You can inspect the live set in many ways: run with the -verbosegc option, or connect to the JVM using the JMC JMX Console and look at the com.sun.management.GarbageCollectorAggregator MBean. Another easy approach is to take a flight recording.
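You can also track the live set from inside the application instead of through the JMC JMX Console. The standard java.lang.management API exposes the heap usage that each memory pool recorded after its most recent collection. The following is a minimal sketch, not an Oracle-provided tool. The class name LiveSetProbe is hypothetical. Summing across heap pools is an approximation: the old-generation pool is the closest match to the live set, and young pools are usually near empty right after a collection.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryType;
import java.lang.management.MemoryUsage;

// Hypothetical helper; the name and the summing approach are illustrative only.
class LiveSetProbe {

    // Sums the "used" value that each heap pool reported right after its most
    // recent collection. The old-generation pool dominates this figure.
    static long postGcHeapBytes() {
        long total = 0;
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            if (pool.getType() != MemoryType.HEAP) {
                continue; // skip Metaspace, code cache, and other non-heap pools
            }
            MemoryUsage afterGc = pool.getCollectionUsage(); // null if unsupported
            if (afterGc != null) {
                total += afterGc.getUsed();
            }
        }
        return total;
    }

    public static void main(String[] args) {
        // Log this periodically; a value that keeps rising after old
        // collections corresponds to a growing live set.
        System.out.println("Heap used after last GC: " + postGcHeapBytes() + " bytes");
    }
}
```

Logging this value at regular intervals gives a before/after comparison similar to the one a flight recording provides.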

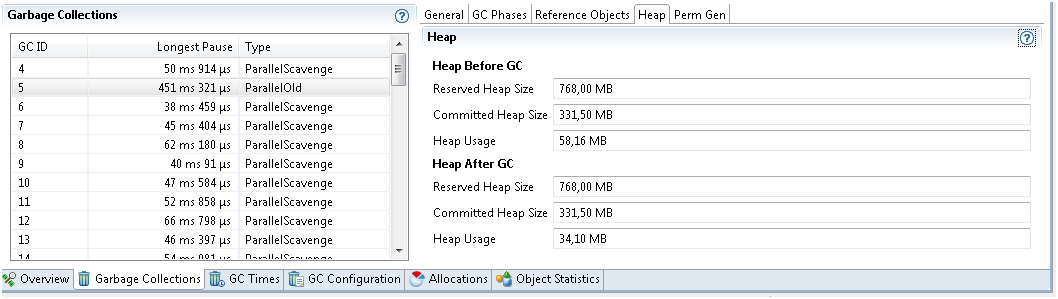

Enable Heap Statistics when you start your recording, which triggers an old collection at the start and at the end of the recording. This may cause a slight latency in the application. However, Heap Statistics generates accurate live set information. If you suspect a rather quick memory leak, then take a profiling recording that runs over, for example, an hour. Click the Memory tab and select the Garbage Collections tab to inspect the first and the last old collections, as shown in Figure 3-1.

Figure 3-1 Debug Memory Leaks - Garbage Collection Tab


Select the first old collection, as shown in Figure 3-1, to look at the heap data and heap usage after GC. In this recording, it is 34.10 MB. Now, look at the same data from the last old collection in the list, and see if the live set has grown. Before taking the recording, you must allow the application to start and reach a stable state.

If the leak is slow, you can take a shorter 5-minute recording. Then, take another recording, for example 24 hours later (depending on how fast you suspect the memory leak to be). Obviously, your live set may go up and down, but if you see a steady increase over time, then you could have a memory leak.
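Live sets naturally fluctuate, so it can be hard to judge whether you are seeing a steady increase. One way to make the call more objective is to fit a least-squares line through the post-GC heap values from successive recordings and look at its slope. The sketch below assumes you have already collected paired samples of (hours since the first recording, live set size). The class and method names are hypothetical, and the values in main are made up for illustration.

```java
// Hypothetical helper: least-squares slope of live-set samples over time.
class LiveSetTrend {

    // hours[i] is the time of sample i; liveSet[i] is the heap used after the
    // old collection at that time (any unit). Returns the slope in that unit per hour.
    static double slopePerHour(double[] hours, double[] liveSet) {
        if (hours.length != liveSet.length || hours.length < 2) {
            throw new IllegalArgumentException("need at least two paired samples");
        }
        int n = hours.length;
        double meanX = 0;
        double meanY = 0;
        for (int i = 0; i < n; i++) {
            meanX += hours[i];
            meanY += liveSet[i];
        }
        meanX /= n;
        meanY /= n;
        double sumXY = 0; // unnormalized covariance
        double sumXX = 0; // unnormalized variance of the time axis
        for (int i = 0; i < n; i++) {
            double dx = hours[i] - meanX;
            sumXY += dx * (liveSet[i] - meanY);
            sumXX += dx * dx;
        }
        if (sumXX == 0) {
            throw new IllegalArgumentException("samples must be taken at different times");
        }
        return sumXY / sumXX;
    }

    public static void main(String[] args) {
        // Made-up values for illustration: live set in MB, one recording per day.
        double[] hours = {0, 24, 48, 72};
        double[] liveSetMb = {34.1, 36.0, 35.2, 38.9};
        System.out.printf("Live set trend: %.3f MB/hour%n", slopePerHour(hours, liveSetMb));
    }
}
```

A slope that stays clearly positive as you add more samples points toward a leak. A slope near zero suggests the live set is only fluctuating. What counts as "clearly positive" depends on your heap size and how long the application must keep running.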

Find the Leaking Class

Use the Java Flight Recordings to identify the memory leak.

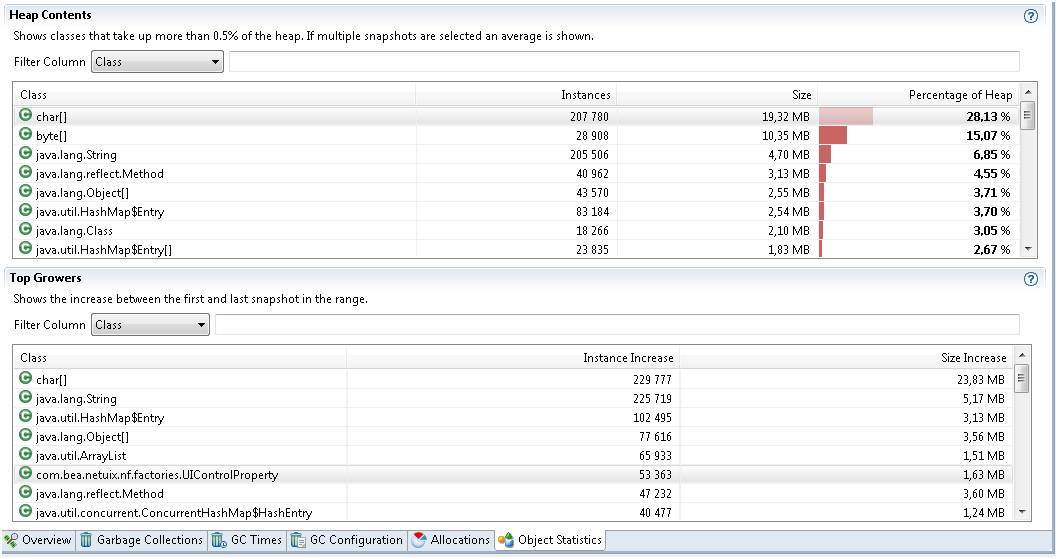

Once you have a recording that shows the leak, look at the Object Statistics. In a single long recording, check which classes grew the most in heap usage over the course of the recording. If you took several recordings at intervals, compare the heap contents sections and see which object types increased the most between the recordings, as shown in Figure 3-2.

Figure 3-2 Debug Memory Leaks - Find Leaking Class

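Comparing the heap contents of two recordings, or two class histograms taken some time apart, is simple bookkeeping. The following sketch uses a hypothetical HistogramDiff class. It takes two maps from class name to instance count and returns the classes that gained the most instances. It assumes the input maps come from whatever tool you used to export the histograms.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper: compares two class histograms (class name -> instance count).
class HistogramDiff {

    // Returns up to `limit` classes whose instance count grew the most between
    // the two snapshots, largest growth first. Classes that shrank are ignored.
    static List<Map.Entry<String, Long>> topGrowers(Map<String, Long> before,
                                                     Map<String, Long> after,
                                                     int limit) {
        Map<String, Long> growth = new HashMap<>();
        for (Map.Entry<String, Long> e : after.entrySet()) {
            long delta = e.getValue() - before.getOrDefault(e.getKey(), 0L);
            if (delta > 0) {
                growth.put(e.getKey(), delta);
            }
        }
        List<Map.Entry<String, Long>> sorted = new ArrayList<>(growth.entrySet());
        sorted.sort((a, b) -> {
            int byGrowth = Long.compare(b.getValue(), a.getValue());
            // Break ties by class name so the order is deterministic.
            return byGrowth != 0 ? byGrowth : a.getKey().compareTo(b.getKey());
        });
        return new ArrayList<>(sorted.subList(0, Math.min(limit, sorted.size())));
    }
}
```

The sketch ranks by instance count rather than by bytes. This follows the advice below: small application objects that keep many Strings alive are easier to spot that way.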

Pay particular attention to classes that are not part of the standard library. For example, char arrays often appear among the top growers because many Strings are being allocated, so look for the objects that keep those Strings alive. An object of a class that has 10 String fields uses little heap itself. Most of the heap is used by the Strings, each of which mainly holds a reference to a char array. Therefore, it is better to sort by the number of instances than by the size of the objects. If one of your application classes has many instances, then those objects may be keeping other objects alive.
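The following hypothetical example shows the kind of application class described above. Each Session object is small, but it holds Strings and an array, and a static map keeps every Session reachable. In a histogram, such a class shows up with a steadily growing instance count, while most of the bytes are attributed to String and array classes. The class and method names are illustrative assumptions.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical example of an application-level leak: every Session stays
// reachable from a static map, so the Strings and array it references can
// never be garbage collected unless the entry is removed.
class SessionRegistry {

    static final class Session {
        final String user;
        final String address;
        final byte[] buffer = new byte[1024]; // stands in for per-session data

        Session(String user, String address) {
            this.user = user;
            this.address = address;
        }
    }

    private static final Map<String, Session> SESSIONS = new ConcurrentHashMap<>();

    // Leaks on its own: nothing removes the entry unless close() is called.
    static Session open(String id, String user, String address) {
        Session session = new Session(user, address);
        SESSIONS.put(id, session);
        return session;
    }

    // The fix: drop the reference when the session ends so the Session
    // object, and everything it holds, becomes unreachable.
    static void close(String id) {
        SESSIONS.remove(id);
    }

    static int openSessions() {
        return SESSIONS.size();
    }
}
```

Calling close() when a session ends lets the garbage collector reclaim the Session and everything it references. Without that call, the instance count grows for as long as the application runs.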

Find the Leak

Tips to identify the memory leak using additional information from Java Flight Recordings.

Some additional information can be found using Java Flight Recordings.

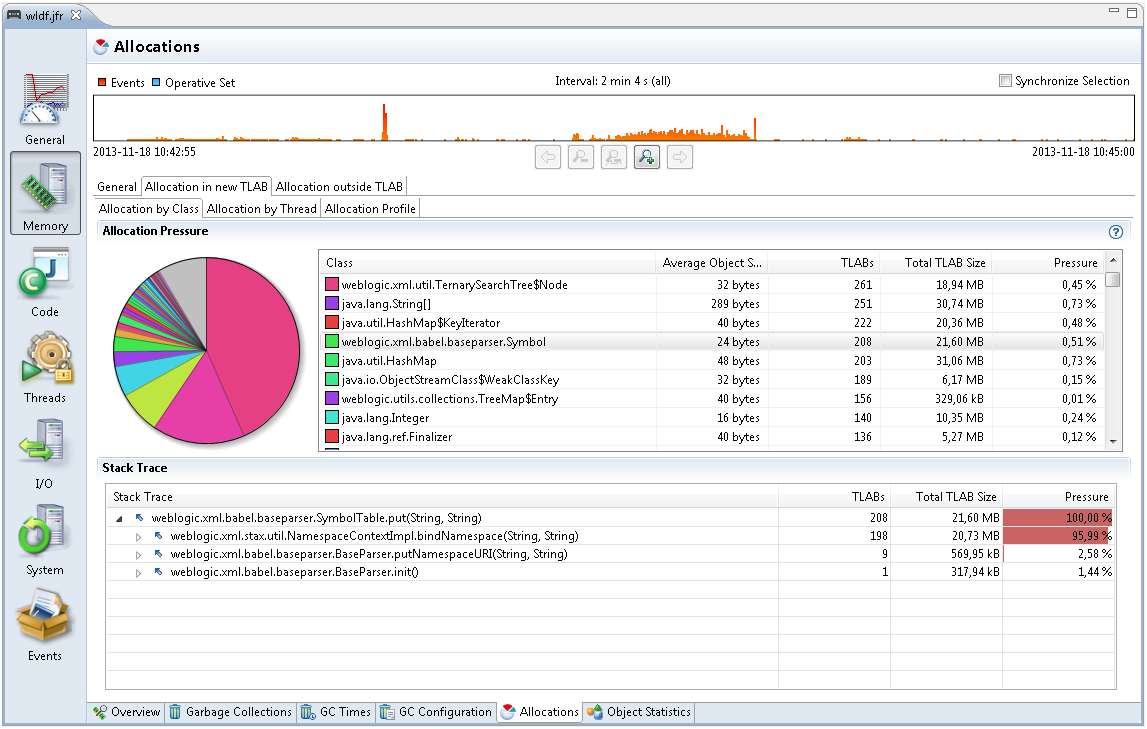

Look at the Allocations subtab, as shown in Figure 3-3, for samples of where objects were allocated.

Figure 3-3 Debug Memory Leaks - Allocations tab


If you suspect that a specific class is leaking, look at the Allocation in new TLAB tab and check the samples of that class being allocated. If the leak is slow, there may be only a few allocations of this class and possibly no samples at all. It may also be that only one specific allocation site leads to the leak. In summary, this approach is not guaranteed to lead you to the allocation stack trace responsible for the leak, but it may give vital clues.

Understand the OutOfMemoryError Exception

The java.lang.OutOfMemoryError error is thrown when there is insufficient space to allocate an object in the Java heap.

One common indication of a memory leak is the java.lang.OutOfMemoryError exception. In this case, the garbage collector cannot make space available to accommodate a new object, and the heap cannot be expanded further. This error may also be thrown when there is insufficient native memory to support the loading of a Java class. In rare cases, a java.lang.OutOfMemoryError can be thrown when an excessive amount of time is being spent doing garbage collection and little memory is being freed.

When a java.lang.OutOfMemoryError exception is thrown, a stack trace is also printed.

The java.lang.OutOfMemoryError exception can also be thrown by native library code when a native allocation cannot be satisfied (for example, if swap space is low).

An early step to diagnose an OutOfMemoryError exception is to determine the cause of the exception. Was it thrown because the Java heap is full, or because the native heap is full? To help you find the cause, the text of the exception includes a detail message at the end, as shown in the following exceptions.

- Exception in thread thread_name: java.lang.OutOfMemoryError: Java heap space

  Cause: The detail message Java heap space indicates that an object could not be allocated in the Java heap. This error does not necessarily imply a memory leak. The problem can be as simple as a configuration issue, where the specified heap size (or the default size, if it is not specified) is insufficient for the application.

  In other cases, and in particular for a long-lived application, the message might indicate that the application is unintentionally holding references to objects, which prevents the objects from being garbage collected. This is the Java language equivalent of a memory leak. Note: The APIs that are called by an application could also be unintentionally holding object references.

  One other potential source of this error arises with applications that make excessive use of finalizers. If a class has a finalize method, then objects of that type do not have their space reclaimed at garbage collection time. Instead, after garbage collection, the objects are queued for finalization, which occurs at a later time. In the Oracle Sun implementation, finalizers are executed by a daemon thread that services the finalization queue. If the finalizer thread cannot keep up with the finalization queue, then the Java heap could fill up, and this type of OutOfMemoryError exception would be thrown. One scenario that can cause this situation is when an application creates high-priority threads that cause the finalization queue to grow faster than the finalizer thread can service it.

- Exception in thread thread_name: java.lang.OutOfMemoryError: GC overhead limit exceeded

  Cause: The detail message "GC overhead limit exceeded" indicates that the garbage collector is running all the time and the Java program is making very slow progress. A java.lang.OutOfMemoryError is thrown after a garbage collection when three conditions hold together: the Java process is spending more than approximately 98% of its time doing garbage collection, it is recovering less than 2% of the heap, and it has been doing so for the last 5 (compile-time constant) consecutive garbage collections. This exception is typically thrown because the amount of live data barely fits into the Java heap, leaving little free space for new allocations.

- Exception in thread thread_name: java.lang.OutOfMemoryError: Requested array size exceeds VM limit

  Cause: The detail message "Requested array size exceeds VM limit" indicates that the application (or APIs used by that application) attempted to allocate an array that is larger than the heap size. For example, if an application attempts to allocate an array of 512 MB, but the maximum heap size is 256 MB, then OutOfMemoryError will be thrown with the reason "Requested array size exceeds VM limit."

- Exception in thread thread_name: java.lang.OutOfMemoryError: Metaspace

  Cause: Java class metadata (the virtual machine's internal representation of Java classes) is allocated in native memory (referred to here as metaspace). The amount of metaspace that can be used for class metadata is limited by the MaxMetaspaceSize parameter, which is specified on the command line. When the amount of native memory needed for class metadata exceeds MaxMetaspaceSize, a java.lang.OutOfMemoryError exception with the detail Metaspace is thrown.

- Exception in thread thread_name: java.lang.OutOfMemoryError: request size bytes for reason. Out of swap space?

  Cause: The detail message "request size bytes for reason. Out of swap space?" appears to be an OutOfMemoryError exception. However, the Java HotSpot VM code reports this apparent exception when an allocation from the native heap failed and the native heap might be close to exhaustion. The message indicates the size (in bytes) of the request that failed and the reason for the memory request. Usually the reason is the name of the source module reporting the allocation failure, although sometimes it is the actual reason.

- Exception in thread thread_name: java.lang.OutOfMemoryError: Compressed class space

  Cause: On 64-bit platforms, a pointer to class metadata can be represented by a 32-bit offset (with UseCompressedOops). This is controlled by the command-line flag UseCompressedClassPointers (on by default). If UseCompressedClassPointers is used, the amount of space available for class metadata is fixed at the amount CompressedClassSpaceSize. If the space needed for compressed class pointers exceeds CompressedClassSpaceSize, a java.lang.OutOfMemoryError with the detail Compressed class space is thrown.

- Exception in thread thread_name: java.lang.OutOfMemoryError: reason stack_trace_with_native_method

  Cause: If the detail part of the error message is "reason stack_trace_with_native_method" and a stack trace is printed in which the top frame is a native method, then a native method has encountered an allocation failure. The difference between this and the "Out of swap space?" message is that here the allocation failure was detected in a Java Native Interface (JNI) or native method rather than in the JVM code.
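For the "GC overhead limit exceeded" case described above, the standard GarbageCollectorMXBean and RuntimeMXBean interfaces give a rough picture of how much time the JVM spends in garbage collection. This is a minimal sketch with a hypothetical GcOverhead class. It computes an average over the whole lifetime of the JVM and does not reproduce the HotSpot check that actually throws the error.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;

// Hypothetical helper: fraction of JVM uptime spent in garbage collection,
// based on the cumulative times reported by each collector MXBean.
class GcOverhead {

    static double gcTimeFraction() {
        long gcMillis = 0;
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            long t = gc.getCollectionTime(); // -1 if this collector does not report it
            if (t > 0) {
                gcMillis += t;
            }
        }
        long uptimeMillis = ManagementFactory.getRuntimeMXBean().getUptime();
        if (uptimeMillis <= 0) {
            return 0.0;
        }
        // Concurrent collectors can overlap application time, so clamp to 1.0.
        return Math.min(1.0, (double) gcMillis / uptimeMillis);
    }

    public static void main(String[] args) {
        System.out.printf("Time spent in GC so far: %.1f%%%n", 100 * gcTimeFraction());
    }
}
```

To see a recent window instead of the whole lifetime, sample the collection times and the uptime twice and divide the differences.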

Troubleshoot a Crash Instead of OutOfMemoryError

Use the information in the fatal error log or the crash dump to troubleshoot a crash.

Sometimes an application crashes soon after an allocation from the native heap fails. This occurs with native code that does not check for errors returned by the memory allocation functions.

For example, the malloc function returns NULL if no memory is available. If the return value of malloc is not checked, then the application might crash when it attempts to access an invalid memory location. Depending on the circumstances, this type of issue can be difficult to locate.

However, sometimes the information from the fatal error log or the crash dump is sufficient to diagnose this issue. The fatal error log is covered in detail in Fatal Error Log. If the cause of the crash is an allocation failure, then determine the reason for the allocation failure. As with any other native heap issue, the system might be configured with an insufficient amount of swap space, another process on the system might be consuming all memory resources, or there might be a leak in the application (or in the APIs that it calls) that causes the system to run out of memory.

Diagnose Leaks in Java Language Code

Use the NetBeans profiler to diagnose leaks in the Java language code.

Diagnosing leaks in the Java language code can be difficult. Usually, it requires very detailed knowledge of the application. In addition, the process is often iterative and lengthy. This section provides information about the tools that you can use to diagnose memory leaks in the Java language code.

Note:

Besides the tools mentioned in this section, a large number of third-party memory debugger tools are available. The Eclipse Memory Analyzer Tool (MAT) and YourKit (www.yourkit.com) are two examples of tools with memory debugging capabilities; MAT is open source and YourKit is commercial. There are many others, and no specific product is recommended.

The following sections describe the utilities and other techniques that you can use to diagnose leaks in Java language code.

Get a Heap Histogram

Different commands and options available to get a heap histogram to identify memory leaks.

You can try to quickly narrow down a memory leak by examining the heap histogram. You can get a heap histogram in several ways:
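One way to get a heap histogram from inside a running application is to invoke the gcClassHistogram operation, which corresponds to the jcmd GC.class_histogram command. This assumes a HotSpot JVM (JDK 8 or later) that registers the DiagnosticCommand MBean. The HeapHistogram class below is a hypothetical sketch. By default the command counts only live objects, which causes a full garbage collection, so avoid calling it frequently on a latency-sensitive system.

```java
import java.lang.management.ManagementFactory;
import javax.management.JMException;
import javax.management.MBeanServer;
import javax.management.ObjectName;

// Hypothetical sketch, assuming a HotSpot JVM where the DiagnosticCommand MBean
// is registered. Runs the same diagnostic command as "jcmd <pid> GC.class_histogram".
class HeapHistogram {

    static String classHistogram() {
        try {
            MBeanServer server = ManagementFactory.getPlatformMBeanServer();
            ObjectName diagnostics = new ObjectName("com.sun.management:type=DiagnosticCommand");
            // The operation takes one String[] of command options; none are passed here.
            Object output = server.invoke(diagnostics, "gcClassHistogram",
                    new Object[] { new String[0] },
                    new String[] { String[].class.getName() });
            return (String) output;
        } catch (JMException e) {
            throw new IllegalStateException("DiagnosticCommand MBean is not available", e);
        }
    }

    public static void main(String[] args) {
        // Print the header and the first rows (the classes using the most heap).
        String[] rows = classHistogram().split("\n");
        for (int i = 0; i < Math.min(12, rows.length); i++) {
            System.out.println(rows[i]);
        }
    }
}
```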

Monitor the Objects Pending Finalization

Different commands and options available to monitor the objects pending finalization.

When the OutOfMemoryError exception is thrown with the "Java heap space" detail message, the cause can be excessive use of finalizers. To diagnose this, you have several options for monitoring the number of objects that are pending finalization:

- The JConsole management tool can be used to monitor the number of objects that are pending finalization. This tool reports the pending finalization count in the memory statistics on the Summary tab pane. The count is approximate, but it can be used to characterize an application and understand if it relies a lot on finalization.

- On Oracle Solaris and Linux operating systems, the jmap utility can be used with the -finalizerinfo option to print information about objects awaiting finalization.

- An application can report the approximate number of objects pending finalization using the getObjectPendingFinalizationCount method of the java.lang.management.MemoryMXBean interface. Links to the API documentation and example code can be found in Custom Diagnostic Tools. The example code can easily be extended to include the reporting of the pending finalization count.
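An application can poll the pending finalization count itself. The sketch below uses a hypothetical FinalizationMonitor class that reads MemoryMXBean.getObjectPendingFinalizationCount() once per second. The value is approximate, so watch the trend rather than individual readings.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryMXBean;

// Hypothetical helper: polls the approximate number of objects waiting for finalization.
class FinalizationMonitor {

    static int pendingFinalization() {
        MemoryMXBean memory = ManagementFactory.getMemoryMXBean();
        return memory.getObjectPendingFinalizationCount(); // approximate value
    }

    public static void main(String[] args) throws InterruptedException {
        // Sample once per second; a steadily rising count suggests that the
        // finalizer thread cannot keep up with the finalization queue.
        for (int i = 0; i < 5; i++) {
            System.out.println("Objects pending finalization: " + pendingFinalization());
            Thread.sleep(1000);
        }
    }
}
```

A count that keeps climbing while the application runs suggests that the finalizer thread is falling behind. That situation can lead to the "Java heap space" error.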

Diagnose Leaks in Native Code

Several techniques can be used to find and isolate native code memory leaks. In general, there is no ideal solution for all platforms.

The following are some techniques to diagnose leaks in native code.

Track All Memory Allocation and Free Calls

Tools available to track all memory allocation and use of that memory.

A very common practice is to track all allocation and free calls of the native allocations. This can be a fairly simple process or a very sophisticated one. Many products over the years have been built up around the tracking of native heap allocations and the use of that memory.

Tools like IBM Rational Purify and the runtime checking functionality of the Sun Studio dbx debugger can be used to find these leaks in normal native code situations. They can also find any access to native heap memory that represents an assignment to uninitialized memory or an access to freed memory. See Find Leaks with the dbx Debugger.

Not all these types of tools will work with Java applications that use native code, and usually these tools are platform-specific. Because the virtual machine dynamically creates code at runtime, these tools can incorrectly interpret the code and fail to run at all, or give false information. Check with your tool vendor to ensure that the version of the tool works with the version of the virtual machine you are using.

See SourceForge for many simple and portable native memory leak detection examples. Most libraries and tools assume that you can recompile or edit the source of the application and place wrapper functions over the allocation functions. The more powerful of these tools allow you to run your application unchanged by interposing over these allocation functions dynamically. This is the case with the library libumem.so, first introduced in the Oracle Solaris 9 operating system update 3; see Find Leaks with the libumem Tool.

Track All Memory Allocations in the JNI Library

If you write a JNI library, then consider creating a localized way to ensure that your library does not leak memory, by using a simple wrapper approach.

The procedure in the following example is an easy localized allocation tracking approach for a JNI library. First, define the following lines in every source file of the library except the one that implements the tracking functions.

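#include <stdlib.h>
/* Prototypes for the tracking functions defined in the debug source file */
void *debug_malloc(size_t n, const char *file, int line);
void debug_free(void *p, const char *file, int line);
#define malloc(n) debug_malloc(n, __FILE__, __LINE__)
#define free(p) debug_free(p, __FILE__, __LINE__)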

Then, you can use the functions in the following example to watch for leaks.

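/* Implementation file: it must NOT see the malloc/free macros above,
   or the calls below would expand back into debug_malloc()/debug_free(). */
#include <stdlib.h>

/* Total bytes currently allocated through debug_malloc() */
static size_t total_allocated;

/* Memory alignment is important: the double in the union keeps the
   user pointer that follows the header suitably aligned */
typedef union { double d; struct {size_t n; const char *file; int line;} s; } Site;

void *
debug_malloc(size_t n, const char *file, int line)
{
    char *rp;
    rp = (char*)malloc(sizeof(Site)+n);
    if (rp == NULL) {
        return NULL;                  /* report failure just like malloc() */
    }
    total_allocated += n;
    ((Site*)rp)->s.n = n;             /* record size and allocation site */
    ((Site*)rp)->s.file = file;
    ((Site*)rp)->s.line = line;
    return (void*)(rp + sizeof(Site));
}

void
debug_free(void *p, const char *file, int line)
{
    char *rp;
    (void)file;                       /* available for reporting bad frees */
    (void)line;
    if (p == NULL) {
        return;                       /* free(NULL) is a no-op */
    }
    rp = ((char*)p) - sizeof(Site);
    total_allocated -= ((Site*)rp)->s.n;
    free(rp);
}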

The JNI library would then need to periodically (or at shutdown) check the value of the total_allocated variable to verify that it made sense. The preceding code could also be expanded to save in a linked list the allocations that remained, and report where the leaked memory was allocated. This is a localized and portable way to track memory allocations in a single set of sources. You would need to ensure that debug_free() was called only with the pointer that came from debug_malloc(), and you would also need to create similar functions for realloc(), calloc(), strdup(), and so forth, if they were used.

A more global way to look for native heap memory leaks involves interposition of the library calls for the entire process.

Track Memory Allocation with Operating System Support

Tools available for tracking memory allocation in an operating system.

Most operating systems include some form of global allocation tracking support.

- On Windows, search the MSDN library for debug support. The Microsoft C++ compiler has the /MD and /MDd compiler options that will automatically include extra support for tracking memory allocation.

- Linux systems have tools such as mtrace and libnjamd to help in dealing with allocation tracking.

- The Oracle Solaris operating system provides the watchmalloc tool. Oracle Solaris 9 operating system update 3 also introduced the libumem tool. See Find Leaks with the libumem Tool.

Find Leaks with the dbx Debugger

The dbx debugger includes the Runtime Checking (RTC) functionality, which can find leaks. The dbx debugger is part of Oracle Solaris Studio and also available for Linux.

The following example shows a sample dbx session.

$ dbx ${java_home}/bin/java
Reading java
Reading ld.so.1
Reading libthread.so.1
Reading libdl.so.1
Reading libc.so.1
(dbx) dbxenv rtc_inherit on
(dbx) check -leaks
leaks checking - ON
(dbx) run HelloWorld
Running: java HelloWorld
(process id 15426)
Reading rtcapihook.so
Reading rtcaudit.so
Reading libmapmalloc.so.1
Reading libgen.so.1
Reading libm.so.2
Reading rtcboot.so
Reading librtc.so
RTC: Enabling Error Checking...
RTC: Running program...
dbx: process 15426 about to exec("/net/bonsai.sfbay/export/home2/user/ws/j2se/build/solaris-i586/bin/java")
dbx: program "/net/bonsai.sfbay/export/home2/user/ws/j2se/build/solaris-i586/bin/java" just exec'ed
dbx: to go back to the original program use "debug $oprog"
RTC: Enabling Error Checking...
RTC: Running program...
t@1 (l@1) stopped in main at 0x0805136d
0x0805136d: main : pushl %ebp
(dbx) when dlopen libjvm { suppress all in libjvm.so; }
(2) when dlopen libjvm { suppress all in libjvm.so; }
(dbx) when dlopen libjava { suppress all in libjava.so; }
(3) when dlopen libjava { suppress all in libjava.so; }
(dbx) cont
Reading libjvm.so
Reading libsocket.so.1
Reading libsched.so.1
Reading libCrun.so.1
Reading libm.so.1
Reading libnsl.so.1
Reading libmd5.so.1
Reading libmp.so.2
Reading libhpi.so
Reading libverify.so
Reading libjava.so
Reading libzip.so
Reading en_US.ISO8859-1.so.3
hello world
hello world
Checking for memory leaks...

Actual leaks report (actual leaks: 27 total size: 46851 bytes)

  Total     Num of  Leaked      Allocation call stack
  Size      Blocks  Block
                    Address
==========  ====== =========== =======================================
     44376       4      -      calloc < zcalloc
      1072       1  0x8151c70  _nss_XbyY_buf_alloc < get_pwbuf < _getpwuid <
                               GetJavaProperties < Java_java_lang_System_initProperties <
                               0xa740a89a< 0xa7402a14< 0xa74001fc
       814       1  0x8072518  MemAlloc < CreateExecutionEnvironment < main
       280      10      -      operator new < Thread::Thread
       102       1  0x8072498  _strdup < CreateExecutionEnvironment < main
        56       1  0x81697f0  calloc < Java_java_util_zip_Inflater_init < 0xa740a89a<
                               0xa7402a6a< 0xa7402aeb< 0xa7402a14< 0xa7402a14< 0xa7402a14
        41       1  0x8072bd8  main
        30       1  0x8072c58  SetJavaCommandLineProp < main
        16       1  0x806f180  _setlocale < GetJavaProperties <
                               Java_java_lang_System_initProperties < 0xa740a89a< 0xa7402a14<
                               0xa74001fc< JavaCalls::call_helper < os::os_exception_wrapper
        12       1  0x806f2e8  operator new < instanceKlass::add_dependent_nmethod <
                               nmethod::new_nmethod < ciEnv::register_method <
                               Compile::Compile #Nvariant 1 < C2Compiler::compile_method <
                               CompileBroker::invoke_compiler_on_method <
                               CompileBroker::compiler_thread_loop
        12       1  0x806ee60  CheckJvmType < CreateExecutionEnvironment < main
        12       1  0x806ede8  MemAlloc < CreateExecutionEnvironment < main
        12       1  0x806edc0  main
         8       1  0x8071cb8  _strdup < ReadKnownVMs < CreateExecutionEnvironment < main
         8       1  0x8071cf8  _strdup < ReadKnownVMs < CreateExecutionEnvironment < main

The output shows that the dbx debugger reports memory leaks if memory is not freed at the time the process is about to exit. However, memory that is allocated at initialization time and needed for the life of the process is often never freed in native code. Therefore, in such cases, the dbx debugger can report memory leaks that are not really leaks.

Note:

The previous example used two suppress commands to suppress the leaks reported in the virtual machine (libjvm.so) and in the Java support library (libjava.so).

Find Leaks with the libumem Tool

The libumem.so library, first introduced in the Oracle Solaris 9 operating system update 3, and the modular debugger (mdb) can be used to debug memory leaks.

Before using libumem, you must preload the libumem library and set an environment variable, as shown in the following example.

$ LD_PRELOAD=libumem.so
$ export LD_PRELOAD
$ UMEM_DEBUG=default
$ export UMEM_DEBUG

Now, run the Java application, but stop it before it exits. The following example uses truss to stop the process when it calls the _exit system call.

$ truss -f -T _exit java MainClass arguments

At this point you can attach the mdb debugger, as shown in the following example.

$ mdb -p pid
> ::findleaks

The ::findleaks command is the mdb command to find memory leaks. If a leak is found, then this command prints the address of the allocation call, buffer address, and nearest symbol.

It is also possible to get the stack trace for the allocation that resulted in the memory leak by dumping the bufctl structure. The address of this structure can be obtained from the output of the ::findleaks command.

See Analyzing Memory Leaks Using libumem for help troubleshooting the cause of a memory leak.